Improving AI Models with Human-Validated Data

What is the Biggest Challenge in AI Model Training Today?

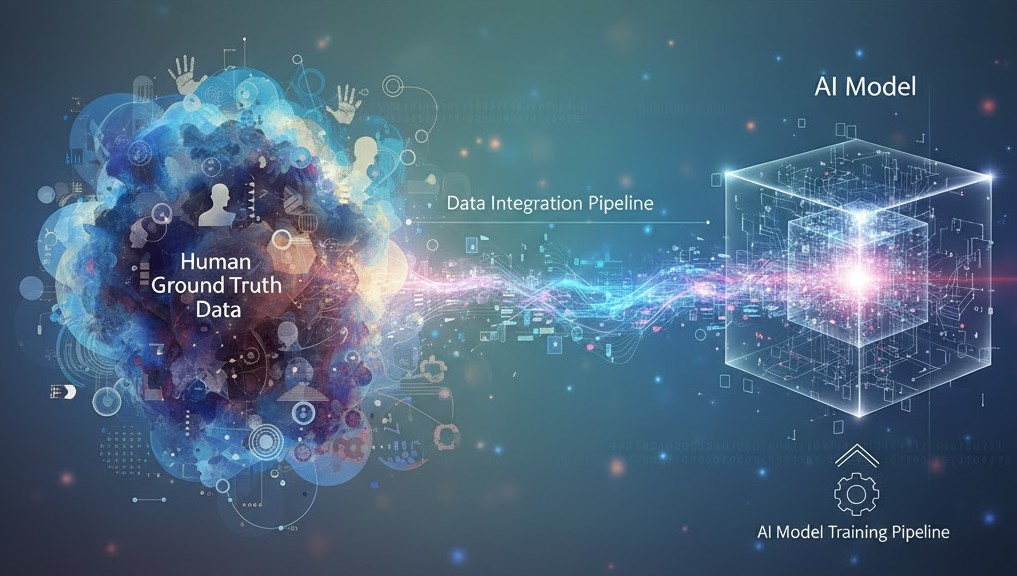

As AI development moves toward more complex architectures, the industry is facing a critical hurdle: Data Provenance and Quality. While many labs rely on web-scraped data, this approach raises the risk of “model collapse,” the gradual degradation in quality that occurs when a model is trained on synthetic, AI-generated content rather than authentic human input.

At American Directions Research Group (ADRG), we solve this by providing human ground truth. Through custom-commissioned data collection, we help AI developers augment and validate their models with fresh, 100% human-originated datasets.

How to Improve AI Model Performance with Human-Validated Data

1. Reducing “Model Drift” with Fresh Data

Most Large Language Models (LLMs) have a “knowledge cutoff,” the date after which their training data contains no information. To remain relevant, these models require Temporal Data Augmentation. ADRG provides real-time data collection from current U.S. populations, allowing developers to:

- Validate model reasoning against current events.

- Update consumer sentiment benchmarks.

- Ensure model outputs align with real-world 2026 data.
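
As a minimal sketch of the temporal idea above, the snippet below separates responses collected after a model's knowledge cutoff so they can serve as a fresh evaluation set. The `SurveyResponse` fields, the `fresh_responses` helper, and the dates are hypothetical and chosen for illustration; they do not describe ADRG's actual data format.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SurveyResponse:
    # Hypothetical record layout, for illustration only.
    respondent_id: str
    collected_on: date
    question: str
    answer: str


def fresh_responses(responses, knowledge_cutoff):
    """Keep only responses collected strictly after the knowledge cutoff."""
    return [r for r in responses if r.collected_on > knowledge_cutoff]


responses = [
    SurveyResponse("r1", date(2024, 3, 1), "Preferred way to shop?", "In store"),
    SurveyResponse("r2", date(2026, 1, 15), "Preferred way to shop?", "Online"),
]
cutoff = date(2025, 6, 30)  # assumed cutoff of the model under test
fresh = fresh_responses(responses, cutoff)
print([r.respondent_id for r in fresh])
```

Only the post-cutoff responses are kept, so the model cannot have seen them during training.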

2. Mitigating Algorithmic Bias through Representative Sampling

To mitigate algorithmic bias, ADRG provides representative, statistically sound human datasets. Web-scraped data is often skewed toward the demographics that are most active online. ADRG uses a mixed-mode approach (CATI, or computer-assisted telephone interviewing, combined with digital and mail) to include hard-to-reach populations, ensuring your training data is:

- Demographically Diverse: Inclusive of rural, elderly, and niche socioeconomic groups.

- Statistically Sound: Moving beyond “noisy” web data to structured, scientific samples.
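
One common way to correct a skewed sample toward known population proportions is post-stratification weighting. The sketch below is a generic illustration of that technique, not a description of ADRG's methodology; the group labels and population shares are made-up figures.

```python
from collections import Counter


def poststratification_weights(sample_groups, population_shares):
    """Return one weight per respondent: population share / sample share of their group."""
    n = len(sample_groups)
    counts = Counter(sample_groups)
    missing = set(population_shares) - set(counts)
    if missing:
        # A group with no respondents cannot be fixed by weighting alone.
        raise ValueError(f"no respondents in groups: {sorted(missing)}")
    return [population_shares[g] / (counts[g] / n) for g in sample_groups]


# Hypothetical figures: the sample over-represents urban respondents.
sample = ["urban"] * 8 + ["rural"] * 2
population = {"urban": 0.6, "rural": 0.4}
weights = poststratification_weights(sample, population)
print(weights[0], weights[-1])
```

Under-represented groups receive weights above 1 and over-represented groups receive weights below 1, so the weighted sample matches the assumed population shares. Weighting cannot recover a group that is missing from the sample entirely, which is why reaching those respondents in the first place matters.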

3. Improving RLHF with High-Fidelity Human Feedback

Reinforcement Learning from Human Feedback (RLHF) requires high-quality “Gold Standard” labels. ADRG’s professional interviewing infrastructure provides nuanced, carefully reasoned responses that outperform low-cost, unverified data sources. This supports better model alignment and more natural conversational AI.
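
To make the idea of “Gold Standard” labels concrete, here is a minimal sketch that aggregates pairwise preference votes from several human raters and keeps only the pairs where raters largely agree. The `aggregate_preferences` function, the `"A"`/`"B"` label scheme, and the 0.75 threshold are assumptions for illustration, not ADRG's process or any particular RLHF library's API.

```python
from collections import Counter


def aggregate_preferences(votes, min_agreement=0.75):
    """Map each pair id to its majority label, keeping only high-agreement pairs.

    votes: dict of pair_id -> list of "A"/"B" labels from different raters.
    """
    gold = {}
    for pair_id, labels in votes.items():
        if not labels:
            continue  # no raters, nothing to aggregate
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            gold[pair_id] = label
    return gold


votes = {
    "pair-1": ["A", "A", "A", "B"],  # 3 of 4 raters agree: kept
    "pair-2": ["A", "B", "B", "A"],  # raters split evenly: dropped
}
gold = aggregate_preferences(votes)
print(gold)
```

Pairs that fail the agreement threshold could be sent back for additional review rather than discarded; which approach is appropriate depends on the project.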

The ADRG Advantage: Secure and Ethical Data Sourcing

In an era of increasing AI regulation, Data Ethics is no longer optional. ADRG ensures every data point is:

- Fully Consented: Zero-party data collected directly from respondents.

- Security Compliant: Collected within secure environments meeting the highest privacy standards.

- Audit-Ready: Providing clear provenance for “Responsible AI” documentation.
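
The sketch below shows one way a per-record provenance entry might look: it stores the collection mode, date, and consent status alongside a SHA-256 hash of the content so that later tampering can be detected. The `ProvenanceRecord` fields and helper functions are hypothetical and are not ADRG's actual schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    # Hypothetical schema, for illustration only.
    record_id: str
    collection_mode: str  # e.g. "CATI", "digital", "mail"
    collected_on: str     # ISO 8601 date
    consent_obtained: bool
    content_sha256: str


def make_record(record_id, collection_mode, collected_on, consent_obtained, content):
    """Create a provenance entry, refusing any data point without consent."""
    if not consent_obtained:
        raise ValueError("refusing to register a data point without consent")
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    return ProvenanceRecord(record_id, collection_mode, collected_on, True, digest)


def verify(record, content):
    """Check that the content still matches the hash stored at collection time."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest() == record.content_sha256


rec = make_record("resp-001", "CATI", "2026-01-15", True, "I shop online weekly.")
print(json.dumps(asdict(rec), indent=2))
```

An auditor holding the record can call `verify` on the stored response to confirm it has not changed since collection.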

Frequently Asked Questions

How can I reduce bias in my AI model?

To reduce bias, developers must supplement web-scraped datasets with representative, human-originated data. Using a research partner like ADRG allows you to commission specific datasets from underrepresented demographics to balance your model’s “worldview.”

What is the difference between synthetic data and human ground truth?

Synthetic data is generated by an AI. Training on it repeatedly can create a “hallucination loop,” in which a model's errors are fed back in and reinforced. Human ground truth, which ADRG collects on behalf of its clients, results from direct human interaction, ensuring the model is grounded in real-world logic and authentic experience.

Why is commissioned data superior to web-scraped data for AI training?

Commissioned data is tailored to a specific use case, has a clear legal chain of custody (consent), and is free from the “noise” and bot-generated content prevalent on the open web.

What is Data Provenance in AI, and why does it matter?

Data Provenance refers to the documented origin and history of a dataset. In the context of “Responsible AI” and increasing regulation, provenance is critical for security and compliance. ADRG ensures audit-ready provenance by collecting fully consented, zero-party data within secure environments, meeting the highest global privacy standards.

How does Human Feedback (RLHF) improve Large Language Models?

Reinforcement Learning from Human Feedback (RLHF) aligns LLM outputs with human intent. By using high-fidelity human feedback from professional interviewers rather than unverified low-cost sources, ADRG helps developers achieve better model reasoning, more natural conversational flows, and a significant reduction in harmful or inaccurate outputs.

Contact American Directions Research Group

Ready to optimize your AI pipeline with custom-commissioned human data? Contact our Data Partnerships Team to discuss your specific requirements for data augmentation, model validation, or RLHF support.